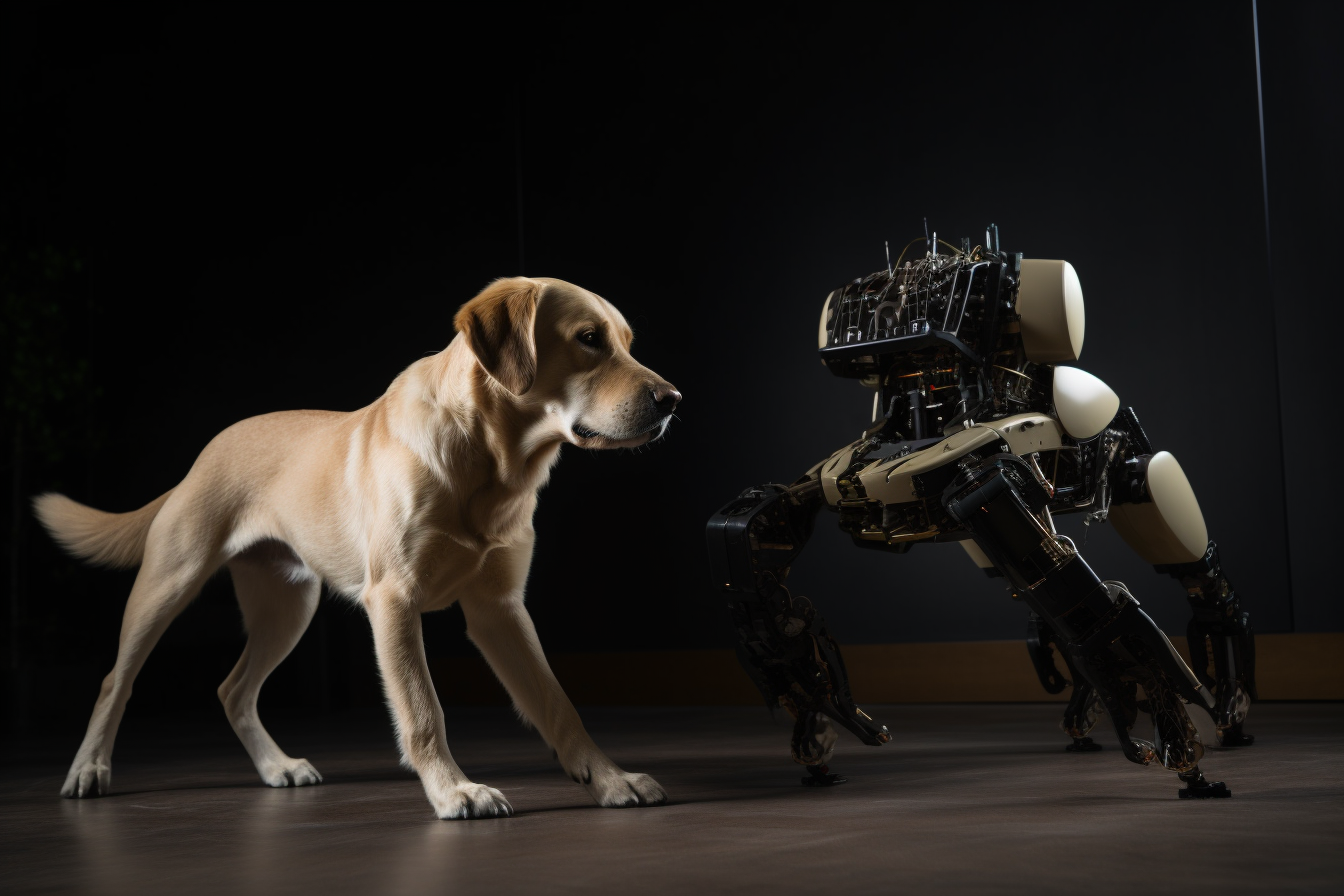

Imagine robots that can mimic the agility and poise of animals and humans, navigating complex terrain with ease. This may sound like science fiction, but the SLoMo framework is a concrete step toward making it plausible.

What is SLoMo?

SLoMo, short for "Skilled Locomotion from Monocular Videos," is a pioneering method that enables legged robots to replicate animal and human motions by transferring these skills from casual, real-world videos. Traditional motion imitation techniques often require expert animators, collaborative demonstrations, or expensive motion capture equipment. SLoMo, by contrast, needs only a readily obtainable monocular video.

The SLoMo Framework

The SLoMo framework operates in three stages:

Stage 1: Synthesis of Physically Plausible Key-Point Trajectory

In this stage, a physically plausible reconstructed key-point trajectory is synthesized from monocular videos. This involves analyzing the video footage and extracting key points that capture the essential motion characteristics.
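To make "key-point trajectory" concrete, it can be stored as a (T, K, 3) array: T video frames, K key points, and an xyz position for each. The sketch below assumes that layout and shows two generic preprocessing steps, a centered moving-average filter that suppresses per-frame jitter and finite-difference velocities. SLoMo's own reconstruction is considerably more involved; `smooth_keypoints` and `keypoint_velocities` are hypothetical names used only for illustration.

```python
import numpy as np

# Assumed layout (not SLoMo's actual data format): (T frames, K key points, xyz).


def smooth_keypoints(keypoints: np.ndarray, window: int = 5) -> np.ndarray:
    """Centered moving average along time for a (T, K, 3) key-point array."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    pad = window // 2
    # Repeat the first and last frames so the output keeps all T frames.
    padded = np.pad(keypoints, ((pad, pad), (0, 0), (0, 0)), mode="edge")
    kernel = np.ones(window) / window
    # Filter every (key point, coordinate) track independently along time.
    return np.apply_along_axis(
        lambda track: np.convolve(track, kernel, mode="valid"), 0, padded
    )


def keypoint_velocities(keypoints: np.ndarray, dt: float) -> np.ndarray:
    """Finite-difference velocities: central inside, one-sided at the ends."""
    return np.gradient(keypoints, dt, axis=0)


# Usage: 2 s of a hypothetical 30 fps clip with one noisy "foot" key point.
fps = 30.0
t = np.arange(60) / fps
rng = np.random.default_rng(0)
raw = np.zeros((t.size, 1, 3))
raw[:, 0, 0] = 0.5 * t  # steady forward motion at 0.5 m/s
raw[:, 0, 2] = 0.05 * np.abs(np.sin(2 * np.pi * t)) + rng.normal(0.0, 0.005, t.size)
smoothed = smooth_keypoints(raw, window=5)
vel = keypoint_velocities(smoothed, 1.0 / fps)
print(smoothed.shape, vel.shape)
```

A moving average leaves straight-line motion unchanged away from the clip boundaries, so the forward velocity is still recovered as 0.5 m/s there while the vertical jitter is damped.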

Stage 2: Offline Optimization of Reference Trajectory

In this stage, a dynamically feasible reference trajectory for the robot is optimized offline. The trajectory includes body and foot motion, as well as a contact sequence, chosen so that it closely tracks the key points extracted in Stage 1. The result is a reference that stays faithful to the motion in the video while remaining physically executable by the robot.
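The offline stage can be illustrated with a heavily simplified trajectory optimization. This sketch assumes a single point mass with double-integrator dynamics standing in for the robot body, with no feet, contacts, or constraints, so tracking the key points while penalizing acceleration reduces to linear least squares. It is not SLoMo's formulation, which also optimizes foot motion and contact sequences under the robot's dynamics; `optimize_reference` and `effort_weight` are hypothetical names.

```python
import numpy as np


def second_difference(T: int, dt: float) -> np.ndarray:
    """(T-2, T) matrix mapping positions p to accelerations (p[t] - 2p[t+1] + p[t+2]) / dt^2."""
    D = np.zeros((T - 2, T))
    for t in range(T - 2):
        D[t, t:t + 3] = [1.0, -2.0, 1.0]
    return D / dt**2


def optimize_reference(keypoints: np.ndarray, dt: float, effort_weight: float = 1e-4):
    """Toy point-mass trajectory optimization, solved as linear least squares.

    Minimizes  sum_t ||p_t - k_t||^2 + effort_weight * sum_t ||a_t||^2,
    where accelerations a_t are tied to positions p_t by finite differences
    (double-integrator dynamics). keypoints: (T,) or (T, d) targets.
    Returns (positions, accelerations).
    """
    T = keypoints.shape[0]
    D = second_difference(T, dt)
    # Stack tracking residuals and weighted acceleration residuals.
    A = np.vstack([np.eye(T), np.sqrt(effort_weight) * D])
    b = np.concatenate([keypoints, np.zeros((T - 2,) + keypoints.shape[1:])])
    positions, *_ = np.linalg.lstsq(A, b, rcond=None)
    return positions, D @ positions


# Usage: noisy body-height key points from a hypothetical 30 fps clip.
dt = 1.0 / 30.0
rng = np.random.default_rng(1)
target = 0.3 + 0.02 * np.sin(np.linspace(0.0, 4.0 * np.pi, 90)) + rng.normal(0.0, 0.005, 90)
ref_pos, ref_acc = optimize_reference(target, dt)
raw_acc = second_difference(90, dt) @ target
print(np.linalg.norm(raw_acc), np.linalg.norm(ref_acc))
```

`effort_weight` trades tracking accuracy against smoothness: at zero the optimizer reproduces the noisy key points exactly, while larger values yield gentler accelerations that a robot is more likely to be able to execute.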

Stage 3: Online Tracking using Model-Predictive Controller

In this final stage, the reference trajectory is tracked online on the robot hardware using a general-purpose model-predictive controller (MPC). Because the controller repeatedly re-plans from the robot's measured state, the robot can execute the desired motion while correcting for disturbances and modeling errors as they occur.
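The core idea of model-predictive control (plan over a short horizon from the current state, apply only the first input, then re-plan at the next step) can be sketched on a toy problem. The example assumes a 1D double integrator (body height, with acceleration as the input), a hypothetical 50 Hz control rate, and no input or contact-force constraints, so each re-plan is an unconstrained least-squares problem. A legged-robot MPC typically also reasons about contact forces and their constraints. The initial 5 cm offset merely stands in for a disturbance such as a terrain height mismatch.

```python
import numpy as np

DT = 0.02  # hypothetical 50 Hz control rate
A = np.array([[1.0, DT], [0.0, 1.0]])  # state: [position, velocity]
B = np.array([0.5 * DT**2, DT])        # input: acceleration


def prediction_matrices(N: int):
    """Stacked predictions X = Phi @ x0 + Gamma @ U for states x_1..x_N."""
    Phi = np.zeros((2 * N, 2))
    Gamma = np.zeros((2 * N, N))
    for k in range(N):
        Phi[2 * k:2 * k + 2] = np.linalg.matrix_power(A, k + 1)
        for j in range(k + 1):
            # x_{k+1} depends on input u_j through A^(k-j) B.
            Gamma[2 * k:2 * k + 2, j] = np.linalg.matrix_power(A, k - j) @ B
    return Phi, Gamma


def mpc_step(x0, ref_window, Phi, Gamma, input_weight=1e-4):
    """Plan over the horizon from the measured state and return the first input."""
    N = ref_window.shape[0]
    S = np.zeros((N, 2 * N))
    S[np.arange(N), 2 * np.arange(N)] = 1.0  # pick out predicted positions
    # Minimize ||S (Phi x0 + Gamma U) - ref||^2 + input_weight * ||U||^2.
    lhs = np.vstack([S @ Gamma, np.sqrt(input_weight) * np.eye(N)])
    rhs = np.concatenate([ref_window - S @ Phi @ x0, np.zeros(N)])
    U, *_ = np.linalg.lstsq(lhs, rhs, rcond=None)
    return U[0]


def run_mpc(reference, x_init, horizon=20):
    """Receding-horizon loop: re-plan at every step, apply only the first input."""
    Phi, Gamma = prediction_matrices(horizon)
    x = np.array(x_init, dtype=float)
    positions = [x[0]]
    for t in range(len(reference) - horizon):
        u = mpc_step(x, reference[t + 1:t + 1 + horizon], Phi, Gamma)
        x = A @ x + B * u
        positions.append(x[0])
    return np.array(positions)


# Usage: hold a 0.30 m body height starting 5 cm too low.
reference = np.full(200, 0.30)
trajectory = run_mpc(reference, x_init=[0.25, 0.0])
print(trajectory[0], trajectory[-1])
```

The controller is never told about the offset; it converges to the reference simply because every plan starts from the measured state.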

Successful Demonstrations and Comparisons

SLoMo has been demonstrated in a range of hardware experiments on a Unitree Go1 quadruped robot and in simulation experiments on the Atlas humanoid robot. These results show the versatility and robustness of the approach: it can handle unmodeled terrain height mismatch on hardware, and it generates offline references directly from videos without annotation.

Limitations and Future Work

Despite its promising outcomes, SLoMo does have limitations that need to be addressed:

Key Model Simplifications and Assumptions

The current implementation of SLoMo relies on key simplifications and assumptions in its models, which may not accurately capture complex motion characteristics. Future research should focus on refining these models to improve accuracy.

Manual Scaling of Reconstructed Characters

The scaling process for reconstructed characters is currently manual, which can be time-consuming and prone to errors. Automating this process will be crucial for practical deployment.
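How the manual scaling is performed is not described here. One simple way it could be automated, sketched under stated assumptions, is to choose a uniform scale factor from the ratio of the robot's leg length to the character's median hip-to-foot distance over the clip. All names and numbers below are hypothetical. A single uniform scale also cannot account for morphological differences, such as mapping a two-legged character onto a quadruped.

```python
import numpy as np


def estimate_scale(hip: np.ndarray, foot: np.ndarray, robot_leg_length: float) -> float:
    """Hypothetical heuristic: robot leg length / character's median leg length.

    hip, foot: (T, 3) reconstructed key-point trajectories of one leg.
    The median makes the estimate less sensitive to frames where the
    reconstruction is poor or the leg is strongly bent.
    """
    leg_lengths = np.linalg.norm(hip - foot, axis=-1)
    return robot_leg_length / float(np.median(leg_lengths))


def scale_keypoints(keypoints: np.ndarray, scale: float, origin: np.ndarray) -> np.ndarray:
    """Uniformly scale a (T, K, 3) key-point array about a fixed origin point."""
    return origin + scale * (keypoints - origin)


# Usage with made-up numbers: a human-sized leg (0.9 m) mapped onto a
# hypothetical robot leg of 0.3 m.
T = 40
hip = np.tile([0.0, 0.0, 0.9], (T, 1))
foot = np.zeros((T, 3))
s = estimate_scale(hip, foot, robot_leg_length=0.3)
keypoints = np.stack([hip, foot], axis=1)  # (T, 2, 3)
scaled = scale_keypoints(keypoints, s, origin=np.zeros(3))
print(s, scaled[0])
```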

Extending the Work to Full-Body Dynamics

To further enhance the framework's capabilities, the work could be extended to include full-body dynamics in both the offline and online optimization stages, which would likely improve its accuracy and robustness.

Investigations and Deployments

Future research should also explore:

- Automating the scaling process and addressing morphological differences between video characters and corresponding robots

- Investigating improvements and trade-offs by using combinations of other methods in each stage of the framework, such as leveraging RGB-D video data

- Deploying the SLoMo pipeline on humanoid hardware, imitating more challenging behaviors, and executing behaviors on more challenging terrains
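One reason RGB-D data could help: a single monocular video carries an inherent depth and scale ambiguity, whereas a depth channel lets each detected key-point pixel be lifted directly to a metric 3D point using the pinhole camera model. The sketch below shows only that back-projection step; the intrinsics `fx`, `fy`, `cx`, `cy` are hypothetical values, and nothing here is part of SLoMo.

```python
import numpy as np


def backproject(pixels: np.ndarray, depth: np.ndarray,
                fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Lift (K, 2) pixel coordinates with (K,) metric depths to (K, 3) camera-frame points.

    Inverts the pinhole projection u = fx * X / Z + cx, v = fy * Y / Z + cy.
    """
    u, v = pixels[:, 0], pixels[:, 1]
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return np.stack([x, y, depth], axis=-1)


# Usage with hypothetical intrinsics for a 640x480 camera.
fx = fy = 525.0
cx, cy = 320.0, 240.0
pixels = np.array([[320.0, 240.0], [845.0, 240.0]])
depth = np.array([1.5, 2.0])
points = backproject(pixels, depth, fx, fy, cx, cy)
print(points)
```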

Conclusion

SLoMo is a promising framework for robot locomotion and motion imitation. Because it transfers skills from casual videos, it could make new behaviors much easier to obtain for applications ranging from search and rescue to entertainment. As the technology matures, robots may come to move far more like their natural counterparts, opening new possibilities for human-robot collaboration.

References

- John Z. Zhang et al., "SLoMo: A General System for Legged Robot Motion Imitation from Casual Videos," Carnegie Mellon University (2023). arXiv:2304.14389